Previous Projects

In this project we are collaborating with the Mazor Mental Health Center and the Shaar Menashe Mental Health Center to produce an alert system for critical events in the hospital. Such events include sexual harassment, suicide attempts, falls, escape attempts, and more.

A violence detection project was recently completed successfully in the lab. We would like to extend it to other events in mental health centers.

Another challenge in this project is that the videos obtained from security cameras contain no sound and must also go through an anonymization process to protect the privacy of the patients and the staff in the center.

We would like to use technological advances to monitor, detect, and even prevent such events. The goal is to build image processing / deep learning based system(s) that classify these videos. Such a tool can help detect the events and allow quick intervention by the security staff, helping to keep the environment safe for all staff and patients.
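A minimal sketch of the alerting step, assuming a hypothetical upstream video classifier that outputs one probability per event type for each short clip. The event names, the smoothing factor `alpha`, and the `threshold` are illustrative assumptions, not parameters of the actual system. Smoothing over consecutive clips is one simple way to avoid raising an alert on a single noisy prediction.

```python
from typing import Dict, Iterable, List, Tuple


def raise_alerts(
    clip_scores: Iterable[Dict[str, float]],
    alpha: float = 0.5,
    threshold: float = 0.7,
) -> List[Tuple[int, str]]:
    """Return (clip_index, event) pairs where an event's smoothed score crosses `threshold`.

    clip_scores: per-clip event probabilities from a hypothetical video classifier.
    alpha: exponential-moving-average factor (higher reacts faster but is noisier).
    """
    smoothed: Dict[str, float] = {}
    active: Dict[str, bool] = {}
    alerts: List[Tuple[int, str]] = []
    for i, scores in enumerate(clip_scores):
        for event, p in scores.items():
            # Exponential moving average suppresses isolated one-clip spikes.
            s = alpha * p + (1 - alpha) * smoothed.get(event, 0.0)
            smoothed[event] = s
            if s >= threshold and not active.get(event, False):
                alerts.append((i, event))  # rising edge: notify the security staff once
                active[event] = True
            elif s < threshold:
                active[event] = False  # re-arm once the score drops again
    return alerts


stream = [{"fall": 0.1}, {"fall": 0.9}, {"fall": 0.95}, {"fall": 0.9}]
print(raise_alerts(stream))
```

With `alpha=0.5`, a single high-scoring clip does not cross the threshold, while a short run of high-scoring clips does; lowering `alpha` trades detection latency for fewer false alarms.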

In this project we will try to create piano sheet music that includes the correct fingering for playing the piece properly. Current sheet music includes only the notes, without instructions on how to play them. We will use image processing, audio, and deep learning to add the correct finger positions to the sheet music.

We collaborate with Haifa3D, a non-profit organization dedicated to developing electronic, smart prosthetic arms for people in need.

Previous research developed a robust robotic arm that can be programmed wirelessly to perform finger gestures in real time.

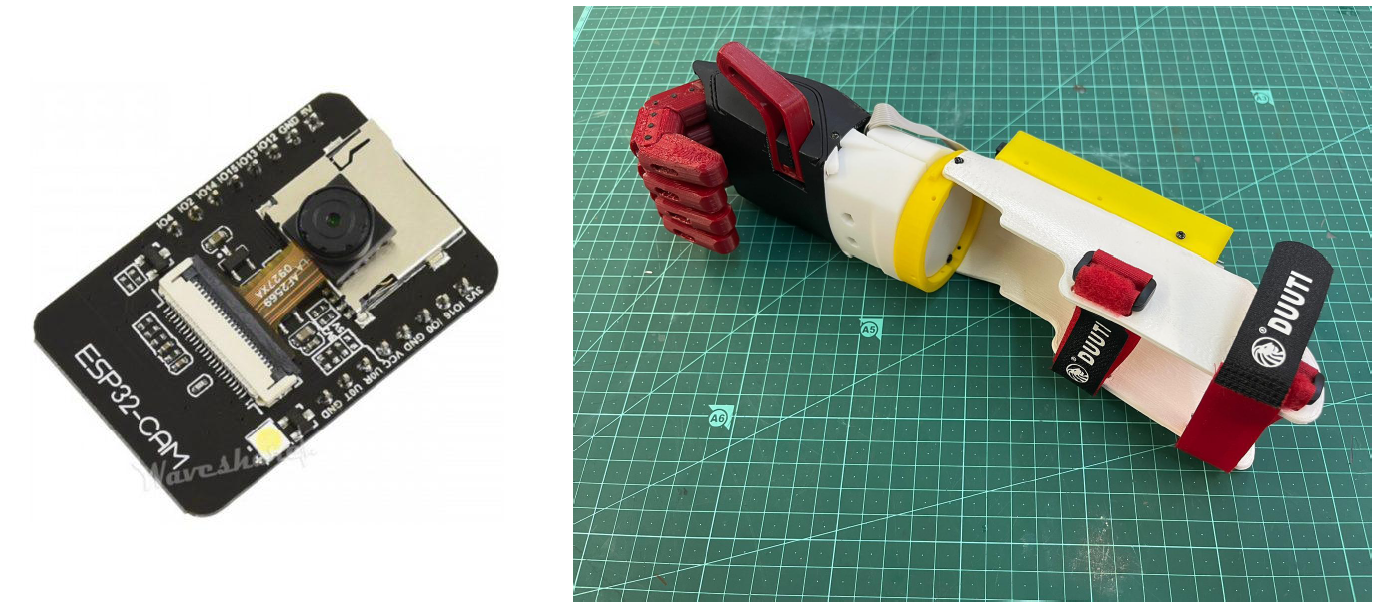

In this project, we are interested in attaching a camera to the palm of the hand, generating a video stream from the hand's point of view, and making algorithmic decisions on whether or not to grasp. You will develop an algorithm that detects objects, together with heuristics that turn those detections into hand instructions. There are many possibilities for the perception stage, such as pretrained models for object detection or depth estimation, and classical computer vision algorithms that detect the contours and shapes of objects.

You will be given an ESP32 module, a dedicated camera, and a programmable robotic hand. Your job is to implement a perception algorithm that detects objects or shapes and provides grasping instructions, connect it to the robotic hand, and test the complete system.
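The perception method is left open, so the sketch below illustrates only the decision step. It assumes some upstream detector has already produced a binary object mask for the current frame and maps it to one of three hypothetical commands (`open`, `approach`, `grasp`). The thresholds, the command names, and the use of apparent object size as a stand-in for distance are all assumptions; the actual interface to the ESP32 and the robotic hand is not specified here.

```python
from typing import List, Optional, Tuple

Mask = List[List[int]]  # 1 = object pixel, 0 = background


def bounding_box(mask: Mask) -> Optional[Tuple[int, int, int, int]]:
    """Return (row_min, col_min, row_max, col_max) of the object pixels, or None if empty."""
    rows = [r for r, row in enumerate(mask) if any(row)]
    if not rows:
        return None
    cols = [c for c in range(len(mask[0])) if any(row[c] for row in mask)]
    return rows[0], cols[0], rows[-1], cols[-1]


def grasp_decision(mask: Mask, min_area_frac: float = 0.25, center_tol: float = 0.2) -> str:
    """Hypothetical heuristic: grasp when the object is centered and fills enough of the frame.

    The fraction of the frame covered by the object is used as a rough proxy for proximity
    (no depth sensor is assumed).
    """
    h, w = len(mask), len(mask[0])
    box = bounding_box(mask)
    if box is None:
        return "open"  # nothing detected: keep the hand open
    r0, c0, r1, c1 = box
    area_frac = sum(map(sum, mask)) / (h * w)
    # Normalized bounding-box center in [0, 1] along each axis.
    cy = (r0 + r1) / 2 / max(h - 1, 1)
    cx = (c0 + c1) / 2 / max(w - 1, 1)
    centered = abs(cy - 0.5) <= center_tol and abs(cx - 0.5) <= center_tol
    if centered and area_frac >= min_area_frac:
        return "grasp"
    return "approach"  # object seen, but too small (far) or off-center


frame = [[0, 0, 0, 0],
         [0, 1, 1, 0],
         [0, 1, 1, 0],
         [0, 0, 0, 0]]
print(grasp_decision(frame))
```

In a real system the mask would come from a detector, contour analysis, or a depth estimate, and the returned command would be translated into the gesture commands that the hand firmware accepts.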

Magnetic Resonance Imaging (MRI) is a leading modality in medical imaging since it is non-invasive and produces excellent contrast. However, its long acquisition time currently prohibits its use in many applications, such as cardiac imaging and emergency rooms. During the past few years, compressed sensing and deep learning have been at the forefront of MR image reconstruction, greatly improving image quality at reduced scan times. In this project, we will develop novel techniques to push beyond the current benchmarks in deep learning based MRI.
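To make the speed/quality trade-off concrete, here is a toy 1D sketch (pure Python, not a real MRI pipeline). MRI measures the image in k-space, i.e. its Fourier transform. The sketch keeps only a subset of k-space samples and reconstructs by filling the missing ones with zeros. Skipping every other sample halves the acquisition (acceleration factor R = 2) but folds the image onto itself; compressed sensing and deep learning reconstruction methods aim to undo this kind of aliasing. The signal and sampling mask are illustrative assumptions.

```python
import cmath
from typing import List, Sequence


def dft(x: Sequence[complex]) -> List[complex]:
    """Naive O(n^2) discrete Fourier transform (stands in for k-space acquisition)."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n)) for k in range(n)]


def idft(X: Sequence[complex]) -> List[complex]:
    """Inverse DFT."""
    n = len(X)
    return [sum(X[k] * cmath.exp(2j * cmath.pi * k * t / n) for k in range(n)) / n for t in range(n)]


def zero_filled_recon(image: Sequence[float], mask: Sequence[bool]) -> List[float]:
    """Keep only the k-space samples where mask is True, zero the rest, and invert."""
    k_space = dft(image)
    undersampled = [v if keep else 0j for v, keep in zip(k_space, mask)]
    return [v.real for v in idft(undersampled)]


def acceleration(mask: Sequence[bool]) -> float:
    """Acceleration factor R: total k-space samples divided by acquired samples."""
    return len(mask) / sum(mask)


image = [1.0, 0, 0, 0, 0, 0, 0, 0]          # a single bright point
mask = [k % 2 == 0 for k in range(8)]       # acquire every other k-space sample
print(acceleration(mask), [round(v, 3) for v in zero_filled_recon(image, mask)])
```

The single bright point reappears half a field of view away at half the intensity, which is the classic fold-over artifact of uniform 2x undersampling.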

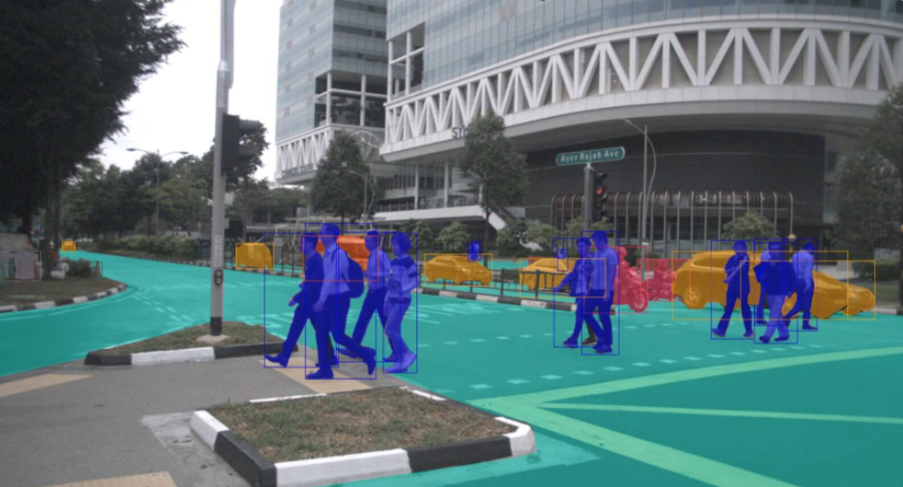

Real-time sensor fusion is critical for autonomous vehicles, which rely on a complex interplay of LiDAR, camera images, and radar data. To make critical navigation decisions in real-time, on-board deep learning models must efficiently process and integrate this sensor data directly within the vehicle, all while operating under limited hardware resources.

Quantization techniques offer a promising approach. By reducing the numerical precision of the model’s calculations, quantization can significantly decrease inference time with little or no loss of accuracy. This enables faster decision-making for autonomous vehicles, a crucial factor for safe and reliable navigation.

In this project, you will apply Post-Training Quantization (PTQ) techniques to both the image encoder [1] and the point-cloud model [2] to improve the inference time of multi-modal models for autonomous driving [3].
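For readers new to PTQ, the sketch below shows its most basic building block: uniform asymmetric 8-bit quantization of a tensor, with a scale and zero-point calibrated from the observed min/max range and no retraining. Published PTQ methods go well beyond this simple min/max scheme, so treat it as an illustration of the underlying mapping only, not as the method to be used in the project. The function names are our own.

```python
from typing import List, Sequence, Tuple


def calibrate(values: Sequence[float], num_bits: int = 8) -> Tuple[float, int]:
    """Min/max calibration for asymmetric uniform quantization; returns (scale, zero_point)."""
    qmin, qmax = 0, 2 ** num_bits - 1
    lo, hi = min(min(values), 0.0), max(max(values), 0.0)  # range must contain 0
    scale = (hi - lo) / (qmax - qmin) or 1.0  # avoid a zero scale for all-zero tensors
    zero_point = int(round(qmin - lo / scale))
    return scale, zero_point


def quantize(values: Sequence[float], scale: float, zero_point: int, num_bits: int = 8) -> List[int]:
    """Map floats to integers in [0, 2**num_bits - 1]."""
    qmax = 2 ** num_bits - 1
    return [min(max(int(round(v / scale)) + zero_point, 0), qmax) for v in values]


def dequantize(q: Sequence[int], scale: float, zero_point: int) -> List[float]:
    """Map integers back to approximate float values."""
    return [(qi - zero_point) * scale for qi in q]


weights = [-1.0, 0.0, 0.5, 1.0]
scale, zp = calibrate(weights)
q = quantize(weights, scale, zp)
print(q, dequantize(q, scale, zp))
```

Within the calibrated range the reconstruction error is at most half a quantization step (`scale / 2`), and 0.0 is represented exactly, which matters for zero padding and ReLU outputs.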

[1] https://arxiv.org/pdf/2102.05426.pdf

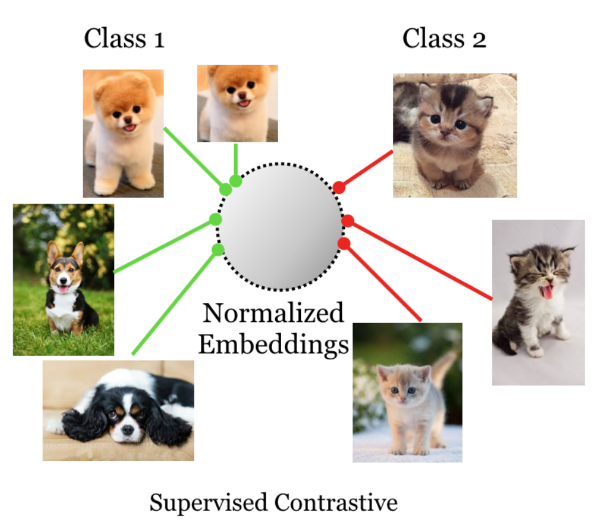

Inspired by the hierarchical way deep neural networks learn features, we aim to improve SupContrast [1] by encouraging the network to learn close features for classes that share a super-class in the early stages of the network (for example, low-level features should be closer for dogs and cats than for dogs and aircraft). SupContrast improvements have been shown to be effective in class-imbalance settings [3].
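One possible way to express this idea as a training objective, sketched under our own assumptions: apply a simplified form of the supervised contrastive loss of [1] to the final embeddings with fine-grained class labels, and add a second, weighted supervised contrastive term on embeddings taken from an early layer, using super-class labels (e.g., "animal" vs. "vehicle"). The function names, the weight `lam`, and the choice of early layer are hypothetical, and the sketch works on plain Python lists rather than a deep learning framework.

```python
import math
from typing import List, Sequence


def _normalize(v: Sequence[float]) -> List[float]:
    n = math.sqrt(sum(x * x for x in v)) or 1.0
    return [x / n for x in v]


def supcon_loss(features: Sequence[Sequence[float]], labels: Sequence[int], tau: float = 0.1) -> float:
    """Supervised contrastive loss: pull same-label embeddings together, push others apart."""
    z = [_normalize(f) for f in features]
    n = len(z)
    total, anchors = 0.0, 0
    for i in range(n):
        positives = [j for j in range(n) if j != i and labels[j] == labels[i]]
        if not positives:
            continue  # anchors without positives do not contribute
        # Temperature-scaled cosine similarities to every other sample.
        sims = {j: sum(a * b for a, b in zip(z[i], z[j])) / tau for j in range(n) if j != i}
        log_denom = math.log(sum(math.exp(s) for s in sims.values()))
        total += -sum(sims[p] - log_denom for p in positives) / len(positives)
        anchors += 1
    return total / anchors if anchors else 0.0


def hierarchical_supcon(early, final, classes, superclasses, lam: float = 0.5, tau: float = 0.1) -> float:
    """Hypothetical objective: class-level loss on final features plus a
    super-class-level loss on early-layer features, weighted by `lam`."""
    return supcon_loss(final, classes, tau) + lam * supcon_loss(early, superclasses, tau)


feats = [[1.0, 0.0], [1.0, 0.1], [0.0, 1.0], [0.1, 1.0]]
print(supcon_loss(feats, [0, 0, 1, 1]), supcon_loss(feats, [0, 1, 0, 1]))
```

The loss is small when embeddings with the same label already cluster together and large when they do not; the super-class term would push early-layer features of, say, dogs and cats toward each other without forcing them to coincide at the final layer.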

[1] https://arxiv.org/pdf/2004.11362.pdf

Predicting injuries among combat soldiers is critical for a variety of reasons [1]. First and foremost, it protects soldiers’ health and safety while reducing the psychological and physical effects of combat. Second, injury prediction contributes to mission success by improving resource allocation and planning, which sustains operational effectiveness and unit readiness. It also cuts costs, supports effective resource management, and helps prevent long-term health problems, benefiting both society and the military by lowering healthcare costs and the proportion of disabled veterans. Ultimately, this proactive strategy promotes soldier morale while ensuring the success and continuity of military operations.

We are looking for students with some experience writing DL algorithms for an influential project on wearables and injuries in combat soldiers, a joint venture between the Physical Therapy Department of Haifa University and the VISTA lab, funded by the IDF.

The study seeks to forecast injuries and identify the critical factors influencing soldier injuries. We aim to achieve this using data collected from wearables worn by Golani troops over a period of six months.

In this project, you will analyze the data and build machine learning models that identify the critical factors influencing soldiers’ well-being, as well as forecasting models that anticipate injuries.

Contact Barak Gahtan if you are driven to work on a project involving large-scale, real-world data to improve combat soldiers’ readiness.

[1] Papadakis N, Havenetidis K, Papadopoulos D, et al. Employing body-fixed sensors and machine learning to predict physical activity in military personnel. BMJ Mil Health 2023;169:152-156.

When quantizing a neural network, it is often desirable to assign different bit-widths to different layers. To that end, we need a method that measures how quantization errors in individual layers affect the overall prediction accuracy of the model. By combining the effects of all layers, the optimal bit-width can then be chosen for each layer. Without such a measure, an exhaustive search over the bit-width of every layer is required, which makes the quantization process inefficient.
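To illustrate why a per-layer sensitivity measure removes the need for exhaustive search, the sketch below makes a simplifying, hypothetical assumption: each layer's quantization noise adds to the output error in proportion to its sensitivity times 4^(-b), since the noise power of a uniform quantizer drops by about 4x (6 dB) per extra bit. Under that assumption, a greedy loop that repeatedly gives one more bit to the layer with the largest noise reduction per stored bit replaces a search over all bit-width combinations. The sensitivity values would come from a layer-sensitivity metric; the function name and the defaults are our own.

```python
from typing import List, Sequence


def allocate_bits(
    sensitivity: Sequence[float],
    num_params: Sequence[int],
    avg_bits: float,
    min_bits: int = 2,
    max_bits: int = 8,
) -> List[int]:
    """Greedy per-layer bit-width allocation under a total memory budget.

    Hypothetical noise model: layer l contributes sensitivity[l] * 4**(-bits[l]),
    and contributions from different layers add up.
    """
    bits = [min_bits] * len(sensitivity)
    budget = avg_bits * sum(num_params)  # total bits available for all weights
    used = sum(b * p for b, p in zip(bits, num_params))
    while True:
        best, best_gain = None, 0.0
        for l, (s, p) in enumerate(zip(sensitivity, num_params)):
            if bits[l] < max_bits and used + p <= budget:
                # Estimated noise reduction from one extra bit, per stored bit.
                gain = s * (4.0 ** -bits[l] - 4.0 ** -(bits[l] + 1)) / p
                if gain > best_gain:
                    best, best_gain = l, gain
        if best is None:
            return bits  # budget exhausted or all layers at max_bits
        bits[best] += 1
        used += num_params[best]


print(allocate_bits([100.0, 1.0], [10, 10], avg_bits=5))
```

For two equally sized layers where one is 100x more sensitive, an average budget of 5 bits ends up as 7 bits for the sensitive layer and 3 bits for the other.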

Cosine similarity, mean squared error (MSE), and signal-to-noise ratio (SNR) have all been proposed as metrics for the sensitivity of DNN layers to quantization. We have shown that the cosine-similarity measure has significant benefits compared to the MSE measure. Yet there is no theoretical analysis showing how these measures relate to the accuracy of the DNN model.
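The three metrics can be computed on a layer's float output and its quantized counterpart as follows. This is a plain-Python sketch of the standard definitions (the function names are ours). The example highlights one concrete difference between them: cosine similarity ignores a global rescaling of the output, while MSE and SNR penalize it. This property alone is not offered as the explanation for the observed benefit of cosine similarity.

```python
import math
from typing import Sequence


def mse(ref: Sequence[float], out: Sequence[float]) -> float:
    """Mean squared error between the float reference and the quantized output."""
    return sum((r - o) ** 2 for r, o in zip(ref, out)) / len(ref)


def cosine_similarity(ref: Sequence[float], out: Sequence[float]) -> float:
    """Cosine of the angle between the two outputs (1.0 means same direction)."""
    dot = sum(r * o for r, o in zip(ref, out))
    norm = math.sqrt(sum(r * r for r in ref)) * math.sqrt(sum(o * o for o in out))
    return dot / norm


def snr_db(ref: Sequence[float], out: Sequence[float]) -> float:
    """Signal power over error power, in decibels."""
    signal_power = sum(r * r for r in ref)
    noise_power = sum((r - o) ** 2 for r, o in zip(ref, out))
    return math.inf if noise_power == 0 else 10 * math.log10(signal_power / noise_power)


ref = [1.0, 2.0, 3.0]
scaled = [2.0, 4.0, 6.0]  # same direction, twice the magnitude
print(cosine_similarity(ref, scaled), mse(ref, scaled), snr_db(ref, scaled))
```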

In this project, we would like to conduct a theoretical and empirical investigation of how quantization in the layer domain affects noise in the feature domain. Considering classification tasks first, there should be a minimal noise level that causes misclassification at the last layer (softmax). This error level can then be propagated backwards to set the noise tolerance of the lower layers. We may be able to borrow insights and models from communication systems, where noise accumulation has been studied extensively.
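A first-order version of this backward propagation can be written down under strong simplifying assumptions (ours, not the project's): the network is a stack of linear layers with 1-Lipschitz activations such as ReLU, noise is injected only at the input, and the worst-case noise direction is considered. Because softmax preserves the ordering of the logits, the smallest logit perturbation that can flip the prediction is the L2 distance from the logit vector to the decision hyperplane between the top two classes, (z1 - z2)/sqrt(2). Each linear layer can amplify noise by at most its spectral norm, which is bounded here by the simpler Frobenius norm. The standard high-resolution model of a uniform quantizer from communication theory (noise variance Δ²/12 for step size Δ) gives the noise level to compare against that tolerance.

```python
import math
from typing import List, Sequence

Matrix = List[List[float]]


def logit_margin(logits: Sequence[float]) -> float:
    """Smallest L2 perturbation of the logits that can change the argmax:
    distance to the hyperplane z_top1 = z_top2, i.e. (z1 - z2) / sqrt(2)."""
    top1, top2 = sorted(logits, reverse=True)[:2]
    return (top1 - top2) / math.sqrt(2)


def frobenius(W: Matrix) -> float:
    """Frobenius norm, a simple upper bound on the spectral norm."""
    return math.sqrt(sum(w * w for row in W for w in row))


def input_tolerance(margin: float, layers: Sequence[Matrix]) -> float:
    """Propagate the tolerated output-noise norm backwards through linear layers,
    using ||W e|| <= ||W||_F ||e||. ReLU is 1-Lipschitz, so it does not tighten the bound.
    Biases do not affect the noise and are ignored."""
    tol = margin
    for W in reversed(layers):
        tol /= frobenius(W)
    return tol


def uniform_quant_noise_rms(n: int, lo: float, hi: float, bits: int) -> float:
    """Expected L2 norm of the quantization noise for n values in [lo, hi],
    using the high-resolution model: step Δ, noise variance Δ²/12 per element."""
    step = (hi - lo) / (2 ** bits - 1)
    return math.sqrt(n * step ** 2 / 12)


margin = logit_margin([3.0, 1.0, 0.0])
print(margin, input_tolerance(margin, [[[2.0, 0.0], [0.0, 2.0]]]))
```

Comparing `uniform_quant_noise_rms` at a layer's input with the propagated tolerance gives a crude bit-width criterion; being a worst-case bound, it is expected to be loose, and tightening it is the kind of question this project targets.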

It has been shown that both the activations and the weights of neural networks can be significantly quantized during propagation while preserving near-state-of-the-art performance on standard benchmarks. Many efforts (by Intel, NVIDIA, etc.) leverage these observations by proposing low-precision hardware. Parallel efforts are devoted to designing efficient models that can run on a CPU or even on a mobile phone. The idea is to use extremely computation-efficient architectures (i.e., architectures with far fewer parameters than traditional ones) that maintain comparable accuracy while achieving significant speedups.

In this project we would like to study the trade-offs between quantization and over-parameterization of neural networks from a theoretical perspective. At a higher level, we would like to study how efforts to reduce the number of operations interact with the parallel efforts in network quantization. Will future models be harder to quantize? Can hardware support for non-uniform quantization help here?

Activation functions are a crucial part of deep neural networks, providing the nonlinear transformation of the features.

In this project we aim to implement an FPGA accelerator and measure the performance of different activations, including some developed here in the lab, to help algorithm developers and to offer a hardware perspective on choosing the architecture.
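As a rough, software-only illustration of the accuracy side of this comparison, the sketch below evaluates activations in integer fixed-point arithmetic of the kind an FPGA datapath would use. The lab's own activations are not described here, so a standard shift-and-add hard-sigmoid, clip(x/4 + 1/2, 0, 1), serves as a stand-in and is compared against the exact sigmoid; the format with 8 fractional bits is an arbitrary assumption. Latency and resource usage, the hardware side of the comparison, can only be measured on the device itself.

```python
import math
from typing import Callable

FRAC_BITS = 8          # hypothetical fixed-point format: 8 fractional bits
ONE = 1 << FRAC_BITS   # integer representation of 1.0


def to_fixed(x: float) -> int:
    """Quantize a real input to the fixed-point grid."""
    return int(round(x * ONE))


def hard_sigmoid_fixed(x_q: int) -> int:
    """clip(x/4 + 1/2, 0, 1) using only a shift, an add, and a clamp (cheap in FPGA logic)."""
    return max(0, min(ONE, (x_q >> 2) + (ONE >> 1)))


def relu_fixed(x_q: int) -> int:
    """ReLU is exact in fixed point apart from input rounding."""
    return max(0, x_q)


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def max_abs_error(approx_fixed: Callable[[int], int], exact: Callable[[float], float],
                  lo: float = -8.0, hi: float = 8.0, steps: int = 1601) -> float:
    """Worst-case error of a fixed-point activation against a float reference on a grid."""
    worst = 0.0
    for i in range(steps):
        x = lo + (hi - lo) * i / (steps - 1)
        y = approx_fixed(to_fixed(x)) / ONE
        worst = max(worst, abs(y - exact(x)))
    return worst


print(max_abs_error(hard_sigmoid_fixed, sigmoid), max_abs_error(relu_fixed, lambda x: max(0.0, x)))
```

The hard-sigmoid's worst-case error (roughly 0.12, near x = ±2) is the price paid for avoiding an exponential and a division in hardware; the same harness can score any other activation once its fixed-point form is written down.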