Research

Camera obscura (Latin for "dark chamber") was probably the first imaging device conceived by man. Light rays penetrating through a pinhole in the blinds of a darkened room projected onto the opposite wall an upside-down image of the landscape outside. Such designs have existed at least since medieval times, first to entertain nobility and, later, as an aid to painters. Surprisingly, despite a huge leap in all other aspects of technology, the design of practically all modern cameras, from a high-end DSLR to a mobile phone camera, remains conceptually identical to that of a camera obscura. An objective lens, an optical system replacing the archaic pinhole, collects the light and forms a tiny flipped image of the world in front of the camera. This image is picked up by an array of photosensitive detectors (pixels) that translate the light into a digital picture. Modern computational technologies challenge this long-lasting design.

Computational photography is an emerging branch of imaging sciences combining the design of optics, image sensor electronics, and a computational system working in concert to produce an image. The cardinal difference from conventional photography is that the image formed by the optics on the sensor (or a system of sensors) is no longer necessarily intelligible to the human eye, but does contain all the information that the computational process needs in order to reconstruct an image. Computational cameras promise to revolutionize photography by reducing the camera cost, form factor, and energy requirements as well as allowing novel imaging capabilities such as hyperspectral or depth imaging. One of the focuses of our research is the use of modern machine learning techniques in computational imaging, and in active and passive 3D imaging in particular.

The pursuit of small form factor, low cost, ever-growing resolution, and uncompromised light sensitivity is putting stringent constraints on the design of modern mobile cameras, such as those we carry with us inside our smartphones. Form factor reduction coupled with the increase in resolution reduces the amount of light collected by a single pixel and results in noisy images. To some extent, this can be countered by increasing the aperture area (decreasing the ratio between the focal distance and the aperture diameter that photographers refer to as F#). However, a decrease in F# reduces the depth-of-field of a camera, meaning that only objects in a limited interval of depths around the point at which the camera is focused will stay in focus, while closer and farther objects will appear blurred. To address this challenge, we designed a special optical system with a phase-encoded aperture.

Placing a piece of glass with a tiny elevated pattern in the pupil of a regular imaging lens allows focusing different wavelengths at different depths in the scene: for example, the red channel may be focused on nearby objects and the green in the middle of the scene, while distant objects stay in focus in the blue channel. An image created by such a camera will appear weirdly blurred to the human eye, but a specially designed computational process recovers a sharp image over a very large range of depths, thus extending the camera’s depth-of-field while still enjoying the light efficiency of a large aperture. We employed machine learning techniques that produce not only an optimal way to perform image reconstruction but also simultaneously optimize the parameters of the optical system that we actually manufactured. As a bonus, our system produces a high-quality depth image indicating the distance of the objects from the camera, with a single exposure, a single aperture, and no active illumination of the scene.
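
The trade-off between F# and depth-of-field that motivates this design can be made concrete with a small calculation. The sketch below uses the standard thin-lens depth-of-field approximation; the focal length, focus distance, and circle-of-confusion values are illustrative assumptions, not parameters of the system described above.

```python
import math


def depth_of_field(focal_mm, f_number, focus_mm, coc_mm=0.005):
    """Return (near, far) limits of acceptable sharpness in mm.

    Thin-lens approximation; coc_mm is an assumed circle of confusion.
    """
    hyperfocal = focal_mm ** 2 / (f_number * coc_mm) + focal_mm
    near = focus_mm * (hyperfocal - focal_mm) / (hyperfocal + focus_mm - 2 * focal_mm)
    if focus_mm >= hyperfocal:
        # Focused at or beyond the hyperfocal distance: sharp out to infinity.
        far = math.inf
    else:
        far = focus_mm * (hyperfocal - focal_mm) / (hyperfocal - focus_mm)
    return near, far


def relative_light(f_number, reference=2.8):
    """Light gathered relative to a reference F# (scales with aperture area, 1/N^2)."""
    return (reference / f_number) ** 2


if __name__ == "__main__":
    # Hypothetical phone-like lens: 4 mm focal length, focused at 1 m.
    for n in (1.4, 2.8, 5.6):
        near, far = depth_of_field(focal_mm=4.0, f_number=n, focus_mm=1000.0)
        print(f"F#={n}: sharp from {near:.0f} mm to {far:.0f} mm, "
              f"light x{relative_light(n):.2f}")
```

Lowering F# gathers more light but narrows the interval between the near and far limits, which is the constraint the phase-encoded aperture is meant to relax.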

We also work on exotic image sensors and on imaging systems that combine coded active illumination with several imaging modalities.

Modern machine learning techniques promise to revolutionize the way we perform signal and image processing. One of the biggest challenges in signal processing is the construction of a faithful signal model that can be asserted even before any measurement thereof has been taken (a prior). The more accurate such a model is, the less data we need to achieve a specific task such as reconstructing a signal from partial or corrupt measurements. Our research combines modeling and learning and aims at solving challenging real-world signal and image processing problems such as sound separation (e.g., separating the vocals from the music) and image denoising.

Research highlight: Every modern digital camera contains a piece of hardware known as an image signal processing pipeline (ISP) that is responsible for taking the raw pixels from the sensor and producing a good-looking photograph or video. ISPs typically have multiple stages performing demosaicing (interpolating the missing pixels created by the color filter array superimposed on top of the pixels that allows color imaging), denoising, and color and intensity correction, to mention just a few.
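
To make the stages listed above concrete, here is a toy, hand-written pipeline in which each stage runs independently on the output of the previous one. It is only an illustrative sketch: the RGGB layout, the half-resolution demosaicing shortcut (real ISPs interpolate to full resolution), the white-balance gains, and the gamma value are all assumptions, and denoising is omitted for brevity.

```python
def demosaic_rggb(raw):
    """Collapse each 2x2 RGGB cell of a raw frame into one RGB pixel.

    raw is a list of rows of floats in [0, 1]; the result has half the
    resolution, which is a simplification of real demosaicing.
    """
    rgb = []
    for y in range(0, len(raw) - 1, 2):
        row = []
        for x in range(0, len(raw[0]) - 1, 2):
            r = raw[y][x]
            g = (raw[y][x + 1] + raw[y + 1][x]) / 2  # average the two green samples
            b = raw[y + 1][x + 1]
            row.append((r, g, b))
        rgb.append(row)
    return rgb


def white_balance(rgb, gains=(2.0, 1.0, 1.5)):
    """Scale each color channel by an assumed per-channel gain."""
    return [[tuple(c * g for c, g in zip(px, gains)) for px in row] for row in rgb]


def gamma_correct(rgb, gamma=2.2):
    """Clip to [0, 1] and apply a display gamma curve."""
    return [[tuple(min(max(c, 0.0), 1.0) ** (1.0 / gamma) for c in px) for px in row]
            for row in rgb]


def toy_isp(raw):
    """Run the stages one after another, each unaware of the others."""
    return gamma_correct(white_balance(demosaic_rggb(raw)))
```

Each function here makes its own pass over the image, which is the kind of stage-by-stage structure that an end-to-end learned pipeline replaces.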

These steps are performed independently from each other, which results in a waste of silicon area and power. We designed DeepISP — an end-to-end learned image processing pipeline that does all these steps at once. Our experiments show that our design produces high image quality (both objective and subjective) and is more computationally efficient. Our design is particularly good at enhancing and removing the noise from low-light images.

Computer vision aims at understanding and interpreting the world through its image, in a way mimicking vision processes in humans and animals. Since its establishment several decades ago, this field has developed steadily, attempting to model and understand various vision-related phenomena from the low level (e.g., segmenting the boundaries of an object in a scene) up to the high level (e.g., recognizing various objects, their relations, and reasoning on what is going on in the scene). It was only at the beginning of our decade, however, that computer vision experienced a quantum leap due to the advent of deep learning. The sweeping success of deep neural networks has (successfully) transformed machine vision into an application of machine learning, demonstrating, to the frustration of many researchers, that in many real-world problems it is better to (sometimes blindly) learn a model from examples rather than build it by hand, even if based on deep domain knowledge.

Before the deep learning landslide, we worked on classical aspects of computer vision such as model fitting and feature detection. Nowadays, our focus has shifted more toward a healthy combination of domain-specific modeling and learning.

A magic tool for peeking inside the workings of our body and seeing what is invisible to the eye without actually cutting the flesh has certainly captivated the imagination of physicians and anatomists since ancient times. Today, thanks to our understanding of various physical phenomena such as penetrating electromagnetic radiation, acoustic waves, nuclear magnetic resonance, or electrophysiological activity, this task no longer requires magic. Physics combined with a lot of engineering allows seeing the invisible inside the body. Modern medical imaging methods across a variety of their modalities rely heavily on signal and image processing. In fact, the signals collected by the detectors in a CT or antennas in an MRI machine are not directly intelligible: it takes a computational process to reconstruct an image that a doctor can interpret.

The reconstruction of the first CT images in the 1970s could take hours and used very basic algorithms, which still earned its inventors the Nobel Prize in medicine. With the increase of computational power at our service, the use of more sophisticated signal processing and machine learning promises to revolutionize the field.

Our research focuses on several aspects of medical imaging and medical data analysis. Firstly, we employ modern machine learning techniques in order to improve the quality of the images produced by existing imaging modalities and extend their applicability to previously infeasible settings. This, for example, includes real-time improvement of contrast and frame rate of medical ultrasound. Secondly, we use data modeling and signal processing in order to create new imaging modalities, such as a combination of acoustic excitation with regular EEG imaging that makes it possible to capture the electric activity of just a small portion of the brain and separate it from the incredible amount of electromagnetic interference produced by the surrounding brain areas. Lastly, we use machine learning methods to analyze medical data in order to diagnose and predict pathologies.

Computer vision aims at endowing computers with the ability to understand the visual world. Since the establishment of the field several decades ago, great progress has been achieved in understanding visual information in the form of 2D images. The progress has been much less spectacular in the case of other forms of regularly sampled data like 3D voxel grids or videos; other (irregular) representations, such as 3D point clouds and meshes, have received even less attention and until recently have been predominantly used in synthetic scenarios like computer-generated environments. The main reason for this gap lies in the availability of different data forms. While digital photography spawned a deluge of two-dimensional images, 3D data have required, until recently, special expertise and equipment owned by a few.

The importance of understanding 3D data is hard to overestimate. The world around us is three-dimensional, and thus only a 3D representation conveys full information about its structure.

3D is also often a much more compact way of representing objects, otherwise described by a very large number of 2D projections.

The advent of ubiquitous low-cost depth sensing technologies now allows virtually anyone to generate 3D data. Microsoft Kinect and Intel RealSense made 3D imaging an affordable commodity, while Apple, in its iPhone X, was among the first to make a 3D sensor our intimate companion. If the history of digital imaging can be used to extrapolate the trend in depth imaging, we are going to face an exponential growth of user-generated 3D content. In order to enable future 3D sensing-based technologies such as autonomous agents that can interact with the environment, it is crucial to provide automatic tools and algorithms for manipulating and understanding 3D data.

Several reasons make understanding and manipulating 3D data challenging. The first reason is representation. While digital images are represented as 2D matrices, in 3D this is not the case. Representations come in many forms and are mainly determined by the type of sensor and the target application. Secondly, 3D data are presently much scarcer. Most state-of-the-art algorithms are based on learning from huge amounts of data. Without such data, special care has to be taken to structure the learning using domain knowledge. Another big challenge is transformation invariance: a large portion of the world around us may undergo non-rigid transformations. Treating each deformation as a distinct shape would be intractable, and therefore special tools are required to incorporate invariance assumptions.
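
The representation issue can be illustrated with a small sketch. A point cloud is an unordered collection of coordinates with no grid structure, and one common (lossy) way to bring it to a regular, image-like form is to quantize it into a voxel occupancy grid. The function name and the voxel size below are illustrative assumptions.

```python
import math


def voxelize(points, voxel_size):
    """Map a list of (x, y, z) points to the set of occupied voxel indices.

    The result does not depend on the order in which the points are listed,
    but all detail finer than voxel_size is lost.
    """
    return {tuple(math.floor(c / voxel_size) for c in p) for p in points}


if __name__ == "__main__":
    cloud = [(0.10, 0.20, 0.30), (0.15, 0.22, 0.31), (1.20, 0.00, -0.40)]
    print(sorted(voxelize(cloud, voxel_size=0.5)))
```

The same scene therefore admits several representations with different trade-offs: the point cloud keeps exact coordinates but has no regular structure, while the voxel grid is regular but coarse.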

Our research focuses on several aspects of 3D vision. Firstly, we design and build sensors that digitize the geometry of the physical world: both dedicated 3D cameras and computational techniques that infer depth as a byproduct of some form of imaging. We have accumulated vast experience with all facets of designing, productizing, and manufacturing depth sensing technologies. Secondly, we work on solving fundamental problems in computational shape analysis such as establishing correspondence between shapes that undergo a deformation and, in addition, are viewed only partially and in the presence of clutter; determining transformation-invariant similarity between shapes; finding compact representations for geometric objects allowing their efficient retrieval in a large-scale database; and manipulating deformable objects such as the human body or face and synthesizing them ex novo. We also build cool applications on top of these methods.

Deep neural networks (DNNs) have become the first-choice machine learning methodology for numerous tasks across different domains including computer vision and audio, speech, and natural language processing. In 2012, deep learning came onto the scene with a bang, showing a 50% improvement on the ImageNet visual recognition task. In the years that followed, various DNN architectures proposed for this task brought the error rates below the average human performance. Around the same time, a similar sharp improvement was achieved in speech recognition performance. Since then, DNNs have taken undisputed leadership in supervised machine learning tasks, achieving spectacular results in visual detection and recognition, image classification, and machine translation, and are among the state-of-the-art methods in other regimes such as reinforcement learning.

The tremendous advantages of DNNs come at the price of computational complexity. Modern deep neural models typically contain millions of parameters occupying many megabytes of memory and require hundreds of millions of arithmetic operations per output. This gives rise to significant hardware architecture challenges both during training and inference, especially in real-time and other time-critical applications. The most commonly used hardware accelerators for DNNs are graphics processing units (GPUs); while they are able to deliver the required computational throughput, their power consumption precludes their use in energy-constrained settings. For this reason, GPU-based DNN accelerators are rarely found on battery-powered platforms such as drones, smartphones, and Internet-of-things devices, which typically opt for custom low-power hardware, such as FPGA and ASIC chips. While significantly reducing the power footprint, FPGAs and ASICs are constrained by a relatively modest amount of on-chip memory, which puts severe limitations on the size of the deep neural models that can run on such chips. One of the focuses of our research is to design a full stack of deep learning, from efficient hardware architecture and its FPGA implementation up to hardware-aware training algorithms that make hardware-related tradeoffs explicit and satisfy various constraints such as power, silicon size, and memory footprint.
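
The orders of magnitude quoted above follow from simple arithmetic. The sketch below counts the parameters and multiply-accumulate operations of a single convolutional layer and the memory its weights occupy at different bit widths; the layer dimensions are hypothetical and chosen only for illustration.

```python
def conv_params(in_ch, out_ch, kernel):
    """Number of weights plus biases in a 2D convolutional layer."""
    return out_ch * (in_ch * kernel * kernel + 1)


def conv_macs(in_ch, out_ch, kernel, out_h, out_w):
    """Multiply-accumulate operations needed to produce one output feature map stack."""
    return out_h * out_w * out_ch * in_ch * kernel * kernel


def weight_bytes(num_params, bits_per_weight):
    """Storage needed for the parameters at a given numeric precision."""
    return num_params * bits_per_weight / 8


if __name__ == "__main__":
    # Hypothetical mid-network layer: 256 -> 256 channels, 3x3 kernel, 56x56 output.
    params = conv_params(256, 256, 3)
    macs = conv_macs(256, 256, 3, 56, 56)
    print(f"parameters: {params:,}, MACs: {macs:,}")
    for bits in (32, 8, 4):
        print(f"{bits}-bit weights: {weight_bytes(params, bits) / 1e6:.2f} MB")
```

Reducing the bit width shrinks the memory footprint proportionally, which is one of the hardware-related trade-offs that matters when on-chip memory is limited.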

Another weak point of modern deep learning techniques is that they are poorly understood theoretically (some people even compare deep learning to alchemy). Our research aims at elucidating the workings of deep neural networks by bridging the empirically successful field of deep learning with the theoretically founded world of data models, sparse and redundant representations, and compressed sensing. Borrowing tools from these domains gives a fresh look at, and a tiny step toward answering, one of the biggest questions in deep learning: why the heck does it work?

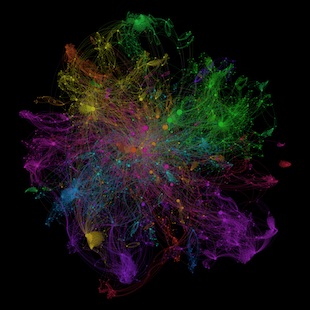

Lastly, while being spectacularly successful on ordered data such as time signals and images, deep learning faces many barriers when it encounters unordered data such as point clouds, meshes, and graphs. For example, it is not even clear what the analog of a convolutional neural network on a graph is. The need to learn from such types of data arises in a variety of fields ranging from social networks to computational biology and drug design. We research the use of various geometric tools, in particular those borrowed from 3D vision and computational shape analysis, that allow constructing DNNs on non-Euclidean domains and unstructured data.
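
One commonly used candidate for such an analog replaces the sliding window of a convolution with aggregation over each node's neighbors, which does not depend on any ordering of the nodes. The sketch below shows this idea with scalar node features and mean aggregation; it is a generic illustration under these assumptions, not necessarily the construction used in this research, and all names in it are hypothetical.

```python
def graph_conv(features, neighbors, w_self, w_neigh):
    """One neighborhood-averaging layer on a graph.

    features:  dict mapping node -> scalar feature
    neighbors: dict mapping node -> list of adjacent nodes
    Returns a dict with the updated feature of every node.
    """
    out = {}
    for node, x in features.items():
        nbrs = neighbors.get(node, [])
        # Mean over neighbors plays the role of the convolution window.
        mean = sum(features[n] for n in nbrs) / len(nbrs) if nbrs else 0.0
        out[node] = max(0.0, w_self * x + w_neigh * mean)  # ReLU nonlinearity
    return out


if __name__ == "__main__":
    feats = {"a": 1.0, "b": 2.0, "c": 3.0}
    adj = {"a": ["b", "c"], "b": ["a"], "c": ["a"]}
    print(graph_conv(feats, adj, w_self=1.0, w_neigh=0.5))
```

Because the two weights are shared by all nodes, the layer can be applied to graphs of any size and connectivity, much as a convolution kernel is shared across all image positions.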

Our research focuses on building new machine learning and statistical analysis tools to describe structure-property relations of molecules, shed light on protein structure and its relation to genetic coding, and generate molecules with prescribed properties.

Research highlight: Fifty years ago, Christian Anfinsen conducted a series of remarkable experiments on ribonuclease proteins, enzymes that “cut” RNA molecules in all living cells and are essential to many biological processes. Anfinsen showed that when the enzyme was “unfolded” by a denaturing agent it lost its function, and when the agent was removed, the protein regained its function. He concluded that the function of a protein was entirely determined by its 3D structure, and the latter, in turn, was entirely determined by the electrostatic forces and thermodynamics of the sequence of amino acids composing the protein. This work, which earned Anfinsen his Nobel Prize in 1972, led to the “one sequence, one structure” principle that remains one of the central dogmas in molecular biology. However, within the cell, protein chains are not formed in isolation, to fold alone once produced. Rather, they are translated from genetic coding instructions (for which many synonymous versions exist to code a single amino acid sequence) and begin to fold before the chain has fully formed, through a process known as co-translational folding. The effects of coding and co-translational folding mechanisms on the final protein structure are not well understood, and there are no studies showing side-by-side structural analysis of protein pairs having alternative synonymous coding.

In our work, we used the wealth of high-resolution protein structures available in the Protein Data Bank (PDB) to computationally explore the association between genetic coding and local protein structure. We observed a surprising statistical dependence between the two that is not readily explainable by known effects. In order to establish the causal direction (i.e., to unambiguously demonstrate that a synonymous mutation may lead to structural changes), we looked for suitable experimental targets. An ideal target would be a protein that has more than one stable conformation; by changing the coding, the protein might get kinetically trapped in a different, experimentally measurable conformation. To our surprise, an attentive study of the PDB data indicated that a very considerable fraction of experimentally resolved protein structures exist as an ensemble of several stable conformations thermodynamically isolated from each other, in clear violation of Anfinsen’s dogma.
